Content provided by LessWrong. All podcast content, including episodes, graphics, and podcast descriptions, is uploaded and provided directly by LessWrong or its podcast platform partner. If you believe someone is using your copyrighted work without your permission, follow the process described at https://hu.player.fm/legal.

“New Report: An International Agreement to Prevent the Premature Creation of Artificial Superintelligence” by Aaron_Scher, David Abecassis, Brian Abeyta, peterbarnett

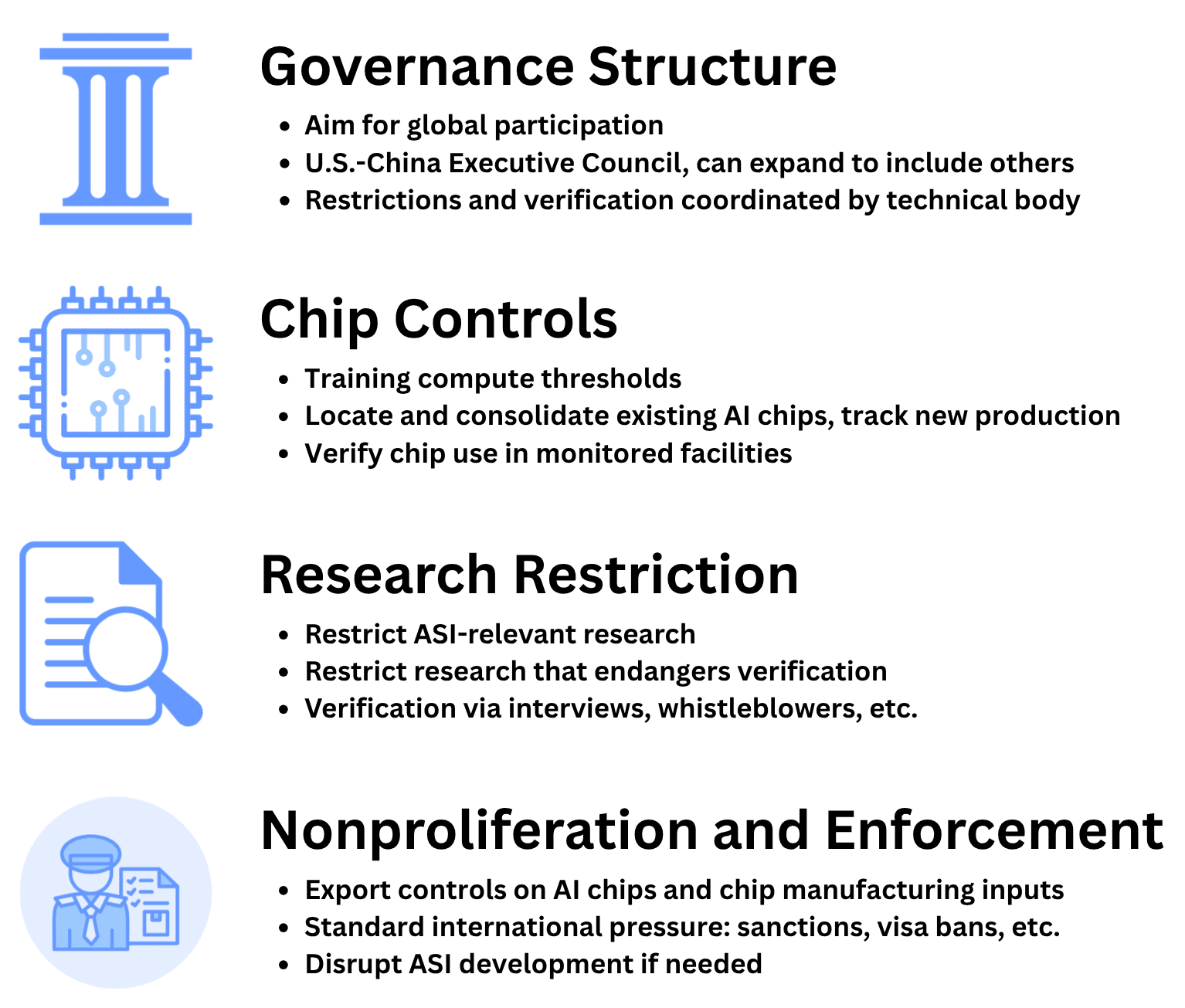

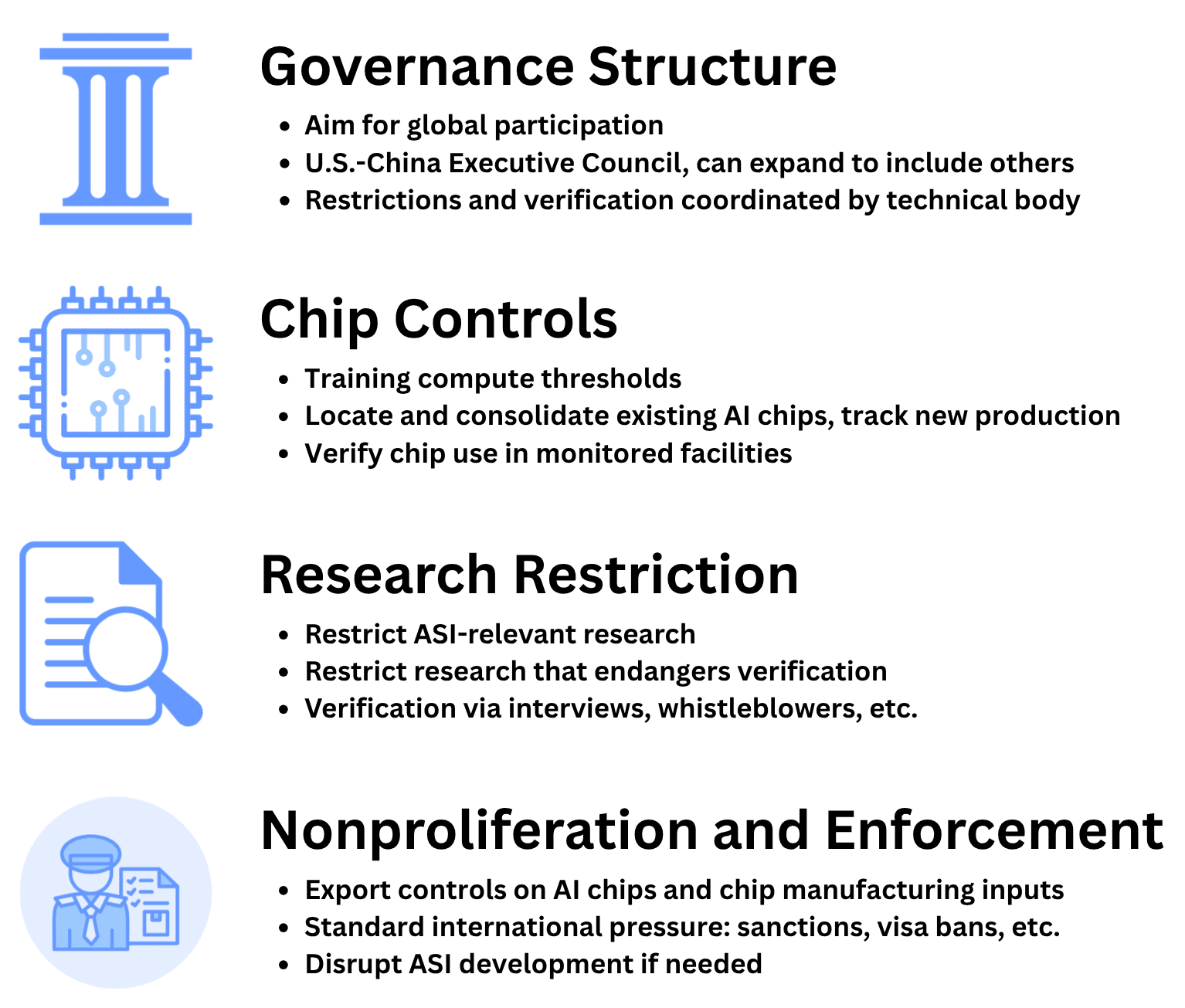

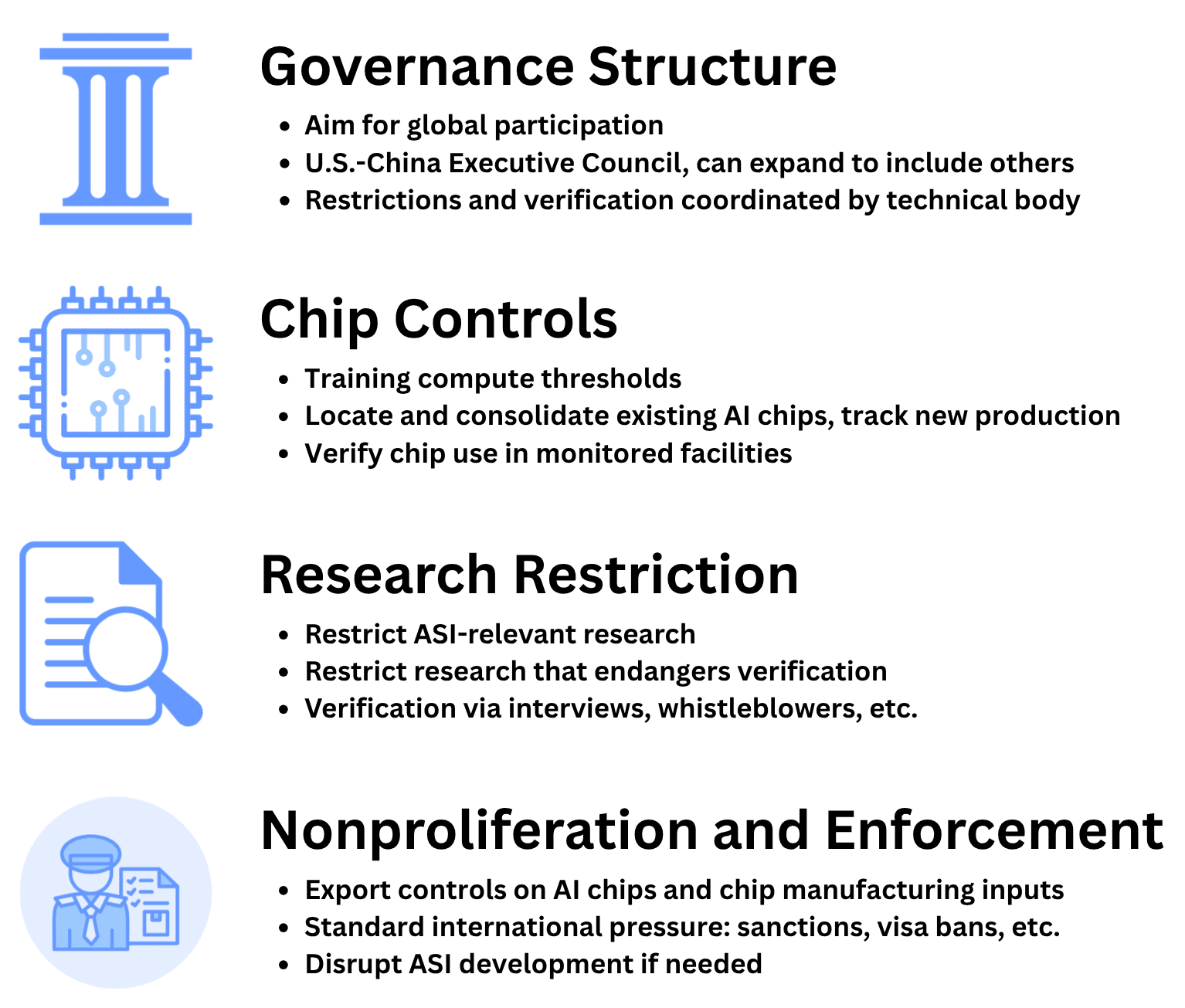

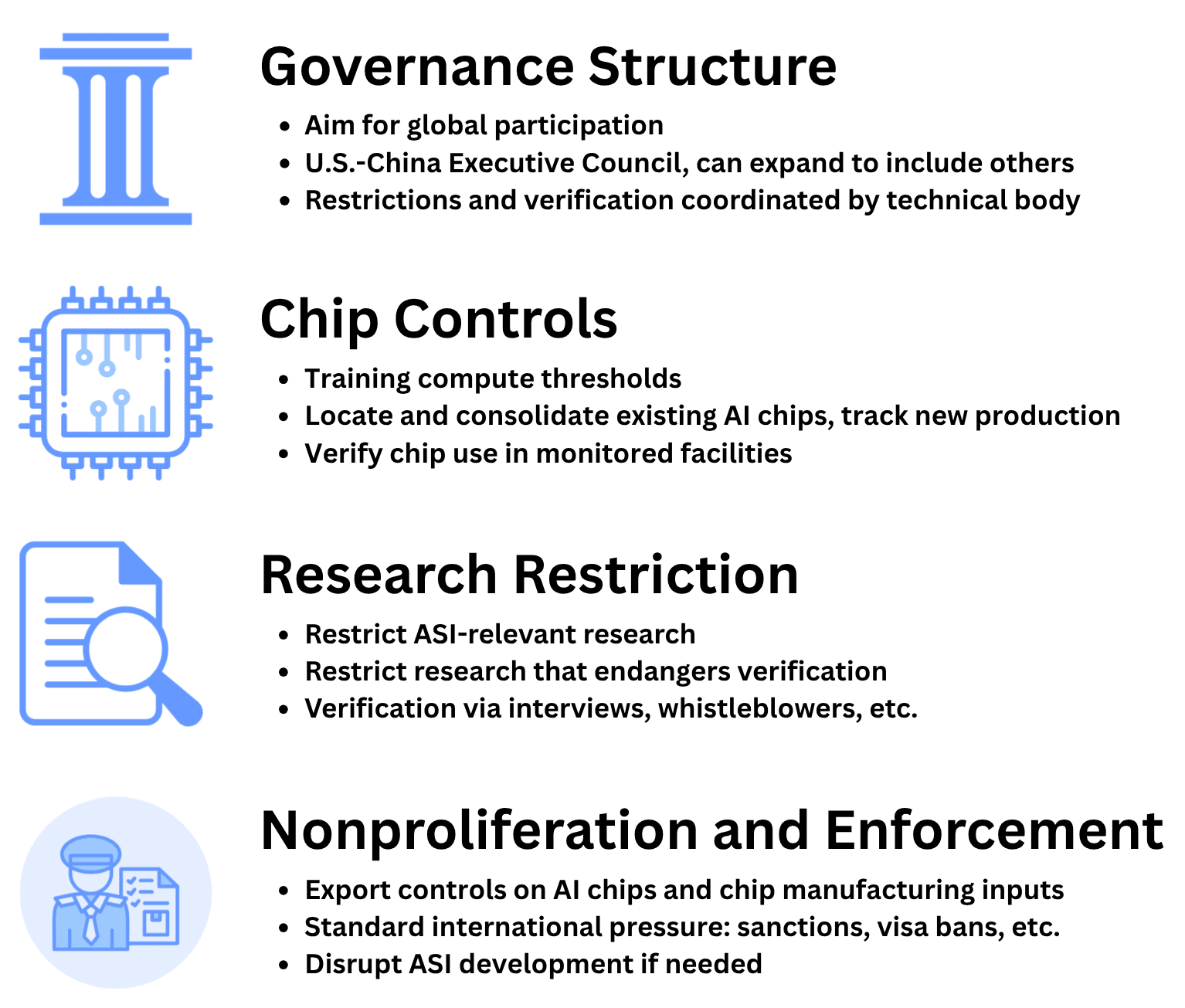

TLDR: We at the MIRI Technical Governance Team have released a report describing an example international agreement to halt the advancement towards artificial superintelligence. The agreement is centered around limiting the scale of AI training, and restricting certain AI research.

Experts argue that the premature development of artificial superintelligence (ASI) poses catastrophic risks, from misuse by malicious actors, to geopolitical instability and war, to human extinction due to misaligned AI. Regarding misalignment, Yudkowsky and Soares's NYT bestseller If Anyone Builds It, Everyone Dies argues that the world needs a strong international agreement prohibiting the development of superintelligence. This report is our attempt to lay out such an agreement in detail.

The risks stemming from misaligned AI are of special concern, widely acknowledged in the field and even by the leaders of AI companies. Unfortunately, the deep learning paradigm underpinning modern AI development seems highly prone to producing agents that are not aligned with humanity's interests. There is likely a point of no return in AI development — a point where alignment failures become unrecoverable because humans have been disempowered.

Anticipating this threshold is complicated by the possibility of a feedback loop once AI research and development can [...]

---

First published:

November 18th, 2025

Source:

https://www.lesswrong.com/posts/FA6M8MeQuQJxZyzeq/new-report-an-international-agreement-to-prevent-the

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.
